A little over three years ago, several of us were introduced to a Swedish company called Anoto through an offhand comment by Glover Ferguson, Accenture's Chief Scientist, during a visit to the Accenture Research Labs in Chicago. Little did I realize that this single comment would lead to several years of hope and frustration, including the Bluetooth scenario outlined previously.

As part of running our R&D initiatives at the Fortune 500 company I work for, we came up with a way for our field technicians to keep using the same tools they had always used, only with updated technology inside. Specifically, a technician using a pen, a phone and paper mapped to a technician using a digital pen, a J2ME phone and Anoto-enabled paper.

What's this digital pen and Anoto paper thing? Basically, it is a way to print a unique pattern on paper (either on demand or pre-printed) that lets the pen "know" where it is whenever it writes on the paper. If you have seen the FLY pentop computer, you have seen digital pen technology. The pattern can be made essentially invisible to the human eye, and it allows the pen to record each stroke, the angle of writing and the time the stroke was made. You can then determine whether a mark fell inside a checkbox on the form. You can also put the strokes together and run handwriting recognition, either to rebuild the form completely for display to an end user or to interpret the data and make it computer readable and actionable.
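To make the checkbox idea concrete, here is a minimal sketch of hit-testing pen samples against a form layout. It assumes the pen delivers each stroke as a list of decoded page coordinates. The `PenPoint`, `Field` and `FormLayout` names, the millimetre units and the "majority of points inside" rule are all hypothetical; none of them come from Anoto's actual SDK.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical: one pen sample decoded from the dot pattern (page coordinates in mm, timestamp in ms).
record PenPoint(double x, double y, long timeMs) {}

// Hypothetical: a rectangular region on the printed form, such as a checkbox.
record Field(String name, double left, double top, double width, double height) {
    boolean contains(PenPoint p) {
        return p.x() >= left && p.x() <= left + width
            && p.y() >= top && p.y() <= top + height;
    }
}

class FormLayout {
    private final List<Field> fields = new ArrayList<>();

    void add(Field f) { fields.add(f); }

    // Assumed rule: a stroke belongs to a field when more than half of its points fall inside it.
    // Returns null for free-form ink, which would go on to handwriting recognition instead.
    String fieldFor(List<PenPoint> stroke) {
        for (Field f : fields) {
            long inside = stroke.stream().filter(f::contains).count();
            if (inside * 2 > stroke.size()) {
                return f.name();
            }
        }
        return null;
    }
}
```

With this sketch, a tick mark drawn inside a `job_done` box maps to that field. Strokes outside every field fall through to recognition.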

This allows us to print an invoice on a piece of Anoto paper and dispatch a technician to the home listed on the printout via a J2ME app that gets notified of work orders. We can track the tech with the built-in GPS on the Nextel phones and capture the information on the form as the tech writes it. That information is then available to our call center or the end user to see what was done, where and when.

We ran several successful pilots of the technology, based on code directly from Anoto as well as from their partners HP (FAS, since discontinued in the US) and Expedata. The technology works. Both docked and wireless versions were rugged and delivered the experience we expected. However, as with much of what you read on this blog, so many things happened in parallel to destroy any reasonable chance of deploying a digital pen solution within our organization that it is beyond funny and simply painful for me to remember.

I still think this technology has incredible merit, especially once you deploy the second, third and subsequent "apps" on it. Contact me if you want to know how or why. After all, I'm a digital monk for a reason.

Core Anoto technology

Saturday, June 10, 2006

Wednesday, June 07, 2006

Use Bluetooth? With a Nextel?

Well, then this may fall into the "what have you done for me lately" category.

When I joined my current employer, I found out that we are one of the largest users of Nextel phones around. At the same time, we had been turned on to a couple of technologies that would really benefit from Bluetooth on a Nextel phone. One was the Anoto Digital Pen (see post coming soon). The other was a way for our field technicians to synchronize in the field via a dial-up network connection. The bad part? Nextel didn't have a Bluetooth phone.

We had several meetings discussing how our application could run on the J2ME environment already in the i58 (Condor) phone. Discussions about the new phone took place with both Nextel and Motorola. The new phone would offer even more Java capabilities. We asked for Bluetooth and for a way for an enterprise to better manage software applications on the phone. We also asked for a large display, rugged capabilities and a candybar design (flip phones wouldn't last in our techs' hands). For whatever reason, one of the parties had a real issue with incorporating Bluetooth into their phones. They didn't want to do it. Their reasons ranged from security issues to resource availability. Yet they kept falling farther behind other carriers and didn't seem to care. To them, Bluetooth was an end-user feature for headsets, not something for data transfer and business use.

After many discussions and examples of use, we got much of what we asked for. We provided devices that we wanted to work with the Bluetooth profile being developed, and we asked to be kept in the loop on software developments. Sadly, the software side didn't pan out the way we hoped, because automated remote software installation capabilities were missing. The phone keypad was also already locked down by the time we saw an initial prototype, and our feedback that it was insufficient came too late. But we did get Bluetooth.

So remember me if you have used an i605 with Bluetooth.

Where were you the week of Aug. 7th, 1990?

I was on my way to US Central Command!

As I've described previously, some of my work at ANL revolved around visualization of wargames. Imagine my surprise when I got a call on a Friday or Saturday of that week telling me to gather my stuff and get on a plane to Tampa. We had developed a number of applications for CENTCOM. We were also among the few who understood NeWS, the window system in which some of the visualization tools were written.

Keep in mind that we had no security clearances. We were called down to a very secure building, where I had almost lost my favorite mix tape on a previous visit. We were always escorted around, with folks yelling "Red Badge!" as we walked into rooms with code key locks. This alerted everyone to secure any potentially secret information from us. We soon found out that they wanted changes to a system that displayed flight sorties and to some code of ours that modeled a war game. The major change was in the visualization, not for the screen but for printouts. They wanted a "driver" to output the maps and symbology of the on-screen visualization to a huge plotter they had.

If I recall correctly, it was an HP plotter, and this was our first exposure to PCL. We were asked to essentially port our PostScript drawing routines (PostScript being the underlying imaging system used by NeWS) to PCL for output. We spent several long days working away. There were no windows in this place, and the Lt. Col. who was our chaperone asked if we wanted something to eat. Sure... Pizza! Little did I know this would be the source of another "first" in my life. The "pizza" arrives. Domino's? WTH is Domino's?!? Eat a couple of pieces... GACK... Worst pizza I've ever had in my life! But it was really late, we were hungry and the food was cheap. We survived.
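Conceptually, a driver like this is a second back end sitting behind the same drawing calls the screen code makes. The sketch below is hypothetical. It assumes the plotter accepted HP-GL pen commands, the vector language of HP plotters, which also lives on as HP-GL/2 inside PCL 5. The `Renderer` and `HpglRenderer` names are invented for illustration and are not taken from the original code.

```java
// Hypothetical drawing interface mirroring PostScript's moveto/lineto/stroke path model.
interface Renderer {
    void moveTo(double x, double y);
    void lineTo(double x, double y);
    void stroke();
}

// Sketch of a plotter back end that emits HP-GL pen commands.
// Assumption: input coordinates are PostScript points (1/72 inch);
// HP-GL plotter units are 0.025 mm, i.e. 1016 units per inch.
class HpglRenderer implements Renderer {
    private static final double UNITS_PER_POINT = 1016.0 / 72.0;
    private final StringBuilder out = new StringBuilder("IN;SP1;"); // initialize, select pen 1
    private final StringBuilder path = new StringBuilder();         // current path, not yet drawn

    private static long units(double points) {
        return Math.round(points * UNITS_PER_POINT);
    }

    @Override public void moveTo(double x, double y) {
        path.append("PU").append(units(x)).append(',').append(units(y)).append(';'); // pen up, move
    }

    @Override public void lineTo(double x, double y) {
        path.append("PD").append(units(x)).append(',').append(units(y)).append(';'); // pen down, draw
    }

    // Like PostScript's stroke: commit the buffered path, then lift the pen.
    @Override public void stroke() {
        out.append(path).append("PU;");
        path.setLength(0);
    }

    String commands() { return out.toString(); }
}
```

The map code only calls `moveTo`, `lineTo` and `stroke`. Because of that, the same routines could in principle feed either the screen or the plotter.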

After several days it was time to head back home. Operation Desert Shield was in full swing. It was exciting to work on the mapping and output routines. Only after the visit did I find out who was in the room we passed on the way to the restroom, the one guarded by two guys with rifles: it was where Gen. Schwarzkopf was hanging out at the time. The maps we were trying to print were meant specifically for the briefings held there. Later I also found the writeup (see link below) that details why some young kids, game programmers and the like were suddenly being called on to help with planning for something like Desert Shield/Storm.

In the time that followed, it was weird to see our friends in the service put on desert fatigues and load Sun workstations into an air-conditioned trailer that was dropped into Kuwait. It was amazing to get emails from our guys at the post out there with questions about the software we had sent them.

Links:

TACWAR/IRAQ/Centcom

Desert Shield Timeline

Saturday, May 27, 2006

Early Java

As I have mentioned earlier, I got into Java early in its life cycle. In fact, the first Java Programming Contest had an entry from me and a co-worker at the time. This is the short version of the story of how we created it.

We had developed some pretty cool GIS applications in Java (see DOOGIS for what they evolved into). Given the type of work we were doing, though, it wasn't deemed appropriate to release them as essentially open source by submitting them to Sun for this contest. Although we had known about the contest for a long time, we decided not to enter. Then, with just two days left before the contest closed (at midnight), we decided to try something cool. Interactive books on PCs were just becoming the rage (or I was finally paying attention because of my own child), and I thought it would be a great concept to make one playable over the Internet.

The basic concept was to create a book made of pages. Each page had a background image, with images and actions overlaid on it, as well as enter/exit actions. This basic concept let us put hot-spots on the page that would play audio, display an image or trigger an animation along a path. The groundwork was laid. We could have words highlight and play their sound, have a character animate along a path, and have an object that was present at first and then disappeared after its animation. We had stuff for kids to try and click on to keep them amused.
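The page model above could be expressed with a handful of classes. This is a hypothetical reconstruction, not the contest code. `Book`, `Page`, `Hotspot` and `Action` are assumed names, and actions are modeled as simple callbacks.

```java
import java.awt.Rectangle;
import java.util.ArrayList;
import java.util.List;

// Hypothetical callback for anything a page can do: play audio, show an image, start an animation.
interface Action { void perform(); }

// A clickable region on the page, such as a word or a character.
class Hotspot {
    final Rectangle bounds;
    final Action onClick;

    Hotspot(Rectangle bounds, Action onClick) {
        this.bounds = bounds;
        this.onClick = onClick;
    }
}

class Page {
    final String backgroundImage;                    // e.g. a scanned illustration
    final List<Hotspot> hotspots = new ArrayList<>();
    final List<Action> onEnter = new ArrayList<>();  // e.g. read the whole sentence aloud
    final List<Action> onExit = new ArrayList<>();   // e.g. stop any playing audio

    Page(String backgroundImage) { this.backgroundImage = backgroundImage; }

    void enter() { onEnter.forEach(Action::perform); }
    void exit() { onExit.forEach(Action::perform); }

    // Dispatch a mouse click to the first hotspot under the pointer; false if nothing was hit.
    boolean click(int x, int y) {
        for (Hotspot h : hotspots) {
            if (h.bounds.contains(x, y)) {
                h.onClick.perform();
                return true;
            }
        }
        return false;
    }
}

class Book {
    final List<Page> pages = new ArrayList<>();
    private int current = -1;

    // Leaving a page runs its exit actions; landing on the next one runs its enter actions.
    void turnTo(int index) {
        if (current >= 0) pages.get(current).exit();
        current = index;
        pages.get(current).enter();
    }

    Page currentPage() { return pages.get(current); }
}
```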

Now that the basic design was in hand, it was time to start coding. I laid out an object structure that I thought made sense. Along with my partner in crime, Bob, I set about gathering the assets we would need for the book. This included scanning images for the background, cutting images out of those to animate and recording the audio that would accompany the book. Everything was going great except for the coding bit. By the end of the first day, with only one day left, I felt the class structure was way too complicated to ever get anything reasonable on screen, never mind finished, by the next day. I was disgusted. I quit. My friend told me he was sure we could get this done in the 24 hours we had left. I was unsure and went home to sleep on it. I woke up the next day completely rejuvenated! I thought I could get this done, and besides, I didn't want to let my friend down.

I got in early and started coding. The assets were coming along, but we didn't have anywhere near enough. We decided to cut the book short and simply demonstrate the concept across several pages rather than a whole book. Only the first two pages would have a "complete" set of capabilities, though there was stuff on every page for the judges to have some fun with (Monty Python and Homer, anyone?). Time ticked away and progress was being made, but it was going to be really close. We checked the contest rules again: it didn't have to be in until midnight Pacific time! Fantastic! We could make it. We got everything up and running about half an hour before the deadline. Then we fought with the submission process until a couple of minutes before it was due, and after an email exchange with some Sun folks, we finally got it to work.

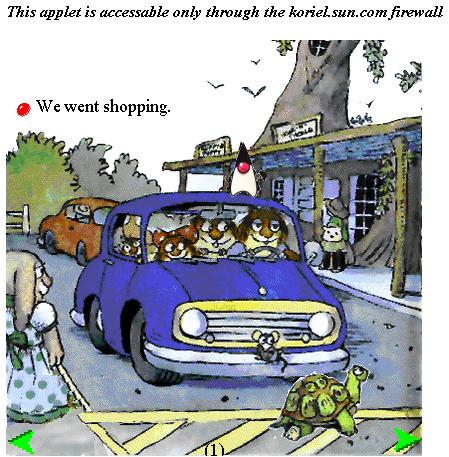

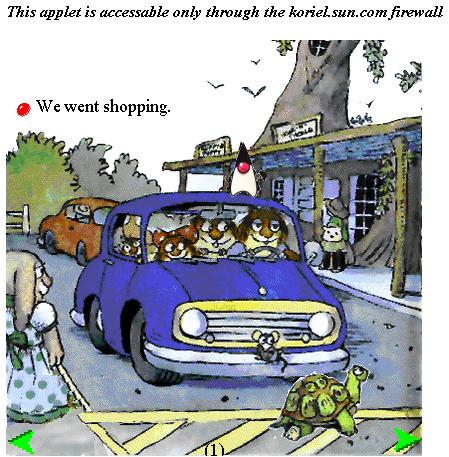

Here's a screenshot of one of the pages:

On this page we have three animated images: the turtle, Duke and a bird. The bird and Duke follow a "complex" path that is defined as taking a set period of time to traverse. A single animation thread handles the movement of all the objects and positions them accordingly. The bird is a "one-shot" item that moves and is then not seen again until the page is reloaded. You can click on the words to highlight them and have them read back to you. The page-enter action takes care of reading the entire sentence(s) upon landing on the page.
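A path that takes some period of time to traverse can be modeled as waypoints plus a duration. On every tick, the single animation thread would ask each sprite for its position at the current elapsed time. This sketch assumes straight-line segments of equal duration. It also assumes that sprites which are not one-shot simply stop at their last waypoint. `PathSprite` and `positionAt` are hypothetical names.

```java
import java.awt.Point;
import java.util.List;

class PathSprite {
    private final List<Point> waypoints;
    private final long durationMs;   // total time to traverse the whole path
    private final boolean oneShot;   // one-shot sprites vanish after finishing, like the bird

    PathSprite(List<Point> waypoints, long durationMs, boolean oneShot) {
        if (waypoints.size() < 2) throw new IllegalArgumentException("need at least two waypoints");
        this.waypoints = List.copyOf(waypoints);
        this.durationMs = durationMs;
        this.oneShot = oneShot;
    }

    // Position at elapsedMs since the page loaded, or null once a one-shot sprite is gone.
    Point positionAt(long elapsedMs) {
        if (elapsedMs >= durationMs) {
            return oneShot ? null : waypoints.get(waypoints.size() - 1);
        }
        int segments = waypoints.size() - 1;
        double progress = (double) Math.max(0, elapsedMs) / durationMs * segments;
        int seg = (int) progress;      // index of the segment we are on
        double t = progress - seg;     // fraction travelled along that segment
        Point a = waypoints.get(seg);
        Point b = waypoints.get(seg + 1);
        return new Point((int) Math.round(a.x + (b.x - a.x) * t),
                         (int) Math.round(a.y + (b.y - a.y) * t));
    }
}
```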

We were delighted simply to have finished something that we thought targeted a unique audience and could translate into a real business opportunity for Java. Finally, the results were announced: Honorable Mention! Cool! It took us 24 hours to design and code, and we won an honorable mention! Not bad for a day's work. It was awesome to be recognized. Looking back now, though, I feel that had the judges included the target audience, kids, we might have done even better.

Sadly, this never evolved into anything bigger (though it does provide grist for the mill). After hunting around a bit, I found the class files. Lo and behold, I tried them in a modern browser with the latest Java plug-in, and they still work.

Saturday, May 20, 2006

The SonyStation that could have been

I have quite a history with Sun and Java, starting with my time at ANL. Toward the end of my time at ANL, I was looking to start a company or join a startup built around Java technologies, and I managed to hook up with a couple of very creative folks in California. One of them had worked on interactive television technologies at GTE, and the other had worked at web development houses such as Atomix. Together we decided to form a virtual company. Through the contacts of one of the team, we got a private invite, along with several other teams, to present Sony with a proposal for how we would develop the Jeopardy license for online play. We split up duties. I took on programming the game engine, while the others looked at the game assets and at the management and day-to-day interactions, and we all collaborated on the overall experience.

The overall concept was pretty huge, but given the tight deadline for a demo (we were the last to join the invitee list), we decided to focus strictly on the gameplay. To give you some background, though: the concept had users connecting to a virtual "green room". There you could choose your gameplay avatar, "mingle" in groups you could chat with, and select one of these groups (of up to 3 players) to start a game of Jeopardy with. Points would be collected, and prizes etc. could be won.

Okay... How was gameplay to be handled? At the time I decided that the best way to play against other players on the Internet was to download a Java applet to the user's computer, which would then communicate with a centralized game server for its questions. I chose this route because JavaScript and browser-based DOM support weren't really effective at the time. The only other real alternative was a server-based approach, which was just yuck! So Java it was. Since the goal of Jeopardy was to let folks play for real prizes, we needed to overcome two significant hurdles. First, how do you let people play on all manner of connections? Second, a consequence of how the game design had it playing: how do you hide the questions and answers on the wire so that folks can't cheat?

Realize that at this time I had never heard of network Doom or anything else related to networked gameplay. Stupid, I guess, but that was just where I was in life. The answer to the first question had me write code that kept up constant communication with the server, which calculated average "ping" times for the messages going back and forth. The game showed the user a question, and a timer counted up for a period of time to let all users read it. Then a countdown began, during which a user could click (or press a number key) on an answer to select it as their choice. Some users would be on T1 lines and others on 28K or 56K modems. So we needed to allow for one connection to have already received the question and started its timers while others were still downloading. All timing was measured from when the countdown started, with the average ping time used as a "slop" allowance. The other issue was preventing folks from intercepting the question and answer on the wire and having a bot play programmatically. Since we had low-end PCs and I didn't have a cryptography background then, we delayed sending the answers until the very last second. Terrible in hindsight, but for a while it would have worked: we had many, many questions to rotate through before a bot could learn them all and their many different answers. It's harder to discuss than to implement, and it worked really, really well for this scenario.
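The timing and anti-cheating scheme can be sketched from the server's side. The server keeps a running average of each player's round-trip time and measures each answer against that player's own countdown start, using the average as slop. It withholds the correct answer until every player's window has closed. This is an illustrative reconstruction, not the original server. The exponentially weighted average (weight 0.2), the class names and grading on the server are all assumptions.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

class LatencyTracker {
    private static final double ALPHA = 0.2; // assumed weight given to each new ping sample
    private final Map<String, Double> avgRttMs = new HashMap<>();

    // The first sample seeds the average; later samples blend in as an exponentially weighted average.
    void recordPing(String player, long rttMs) {
        avgRttMs.merge(player, (double) rttMs, (old, sample) -> (1 - ALPHA) * old + ALPHA * sample);
    }

    double averageRtt(String player) { return avgRttMs.getOrDefault(player, 0.0); }
}

class Round {
    private final String correctAnswer;               // never sent while any window is open
    private final long countdownMs;
    private final LatencyTracker latency;
    private final Map<String, Long> startedAt = new HashMap<>(); // server time each player's countdown began

    Round(String correctAnswer, long countdownMs, LatencyTracker latency) {
        this.correctAnswer = correctAnswer;
        this.countdownMs = countdownMs;
        this.latency = latency;
    }

    // Called when a player's client reports that its countdown has begun (slow links report later).
    void countdownStarted(String player, long serverNowMs) { startedAt.put(player, serverNowMs); }

    // A player's window stays open for the countdown plus their average round trip as slop.
    private boolean windowOpen(String player, long start, long serverNowMs) {
        return serverNowMs - start <= countdownMs + latency.averageRtt(player);
    }

    boolean acceptAnswer(String player, long serverNowMs) {
        Long start = startedAt.get(player);
        return start != null && windowOpen(player, start, serverNowMs);
    }

    // Assumed: the server grades, since clients do not have the answer yet.
    boolean isCorrect(String player, String choice, long serverNowMs) {
        return acceptAnswer(player, serverNowMs) && correctAnswer.equals(choice);
    }

    // Release the answer only after every player's window has closed.
    Optional<String> revealAnswer(long serverNowMs) {
        for (Map.Entry<String, Long> e : startedAt.entrySet()) {
            if (windowOpen(e.getKey(), e.getValue(), serverNowMs)) {
                return Optional.empty();
            }
        }
        return Optional.of(correctAnswer);
    }
}
```

In this sketch, a modem player whose answer arrives 10,200 ms after their countdown began is still accepted under a 10,000 ms countdown with 400 ms of average ping slop. A T1 player with 40 ms of slop is not accepted at 10,100 ms.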

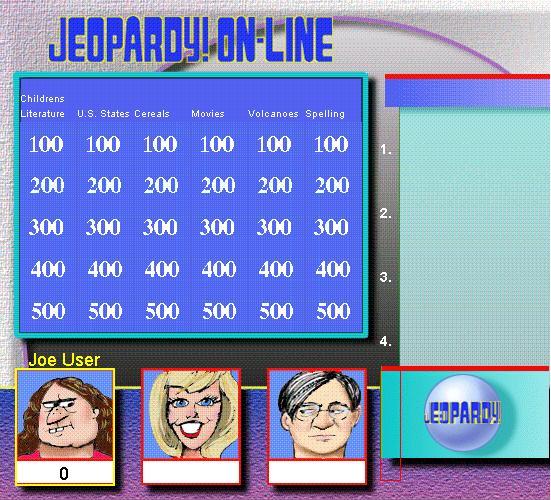

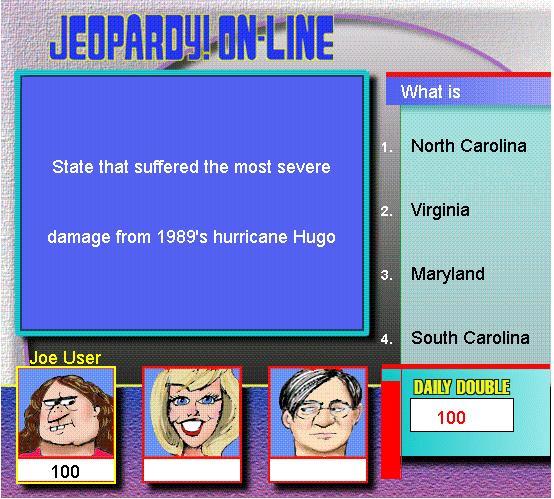

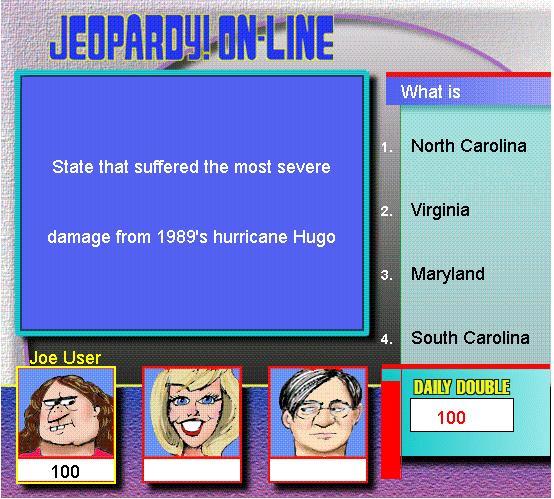

Here's a screenshot of the first screen of the game we delivered. Control of the board was indicated by the yellow outline, which every player could see and which would move around during gameplay. The screenshot below is from a single-user game; in multi-user mode it would show the name of every player.

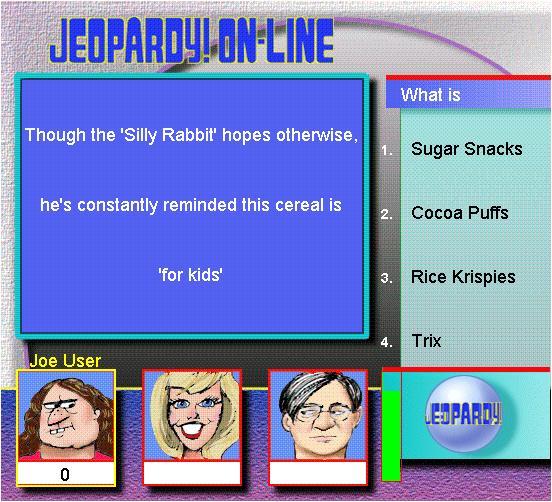

This screenshot shows the board after one of the squares above was selected, with the countdown (green to yellow to red) under way. While the question is meant to be read, the bar grows "up" in red. It then shifts to green and counts down.
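The bar's behaviour is a small two-phase state machine. During the reading phase it grows in red. During the answer phase it shrinks while shifting from green through yellow to red. The sketch assumes a linear fill and colour changes at the one-third marks, because the post doesn't give the real timings. `CountdownBar` and `BarState` are hypothetical names.

```java
enum BarColor { RED, GREEN, YELLOW }

// Hypothetical snapshot of the timer bar: how full it is (0.0 to 1.0) and its colour.
record BarState(double fill, BarColor color) {}

class CountdownBar {
    private final long readMs;    // assumed length of the reading phase
    private final long answerMs;  // assumed length of the answer countdown

    CountdownBar(long readMs, long answerMs) {
        this.readMs = readMs;
        this.answerMs = answerMs;
    }

    BarState at(long elapsedMs) {
        if (elapsedMs < readMs) {
            // Reading phase: the red bar grows "up" toward full.
            return new BarState((double) elapsedMs / readMs, BarColor.RED);
        }
        // Answer phase: the bar empties, going green, then yellow, then red as time runs out.
        long remaining = Math.max(0, readMs + answerMs - elapsedMs);
        double fill = (double) remaining / answerMs;
        BarColor color = fill > 2.0 / 3 ? BarColor.GREEN
                       : fill > 1.0 / 3 ? BarColor.YELLOW
                       : BarColor.RED;
        return new BarState(fill, color);
    }
}
```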

We even included the concept of Daily Doubles. Other users would simply see a screen showing they were locked out of the competition at that time, and then play would resume. At the end of each round, all users would get to see the correct answer, colored dark blue.

We were able to show this in a formal presentation to the folks in charge of SonyStation at Sony. In fact, I was able to play against the VP at Sony from my house over my modem. Since I had developed the application, I knew the questions and answers, so I was sandbagging a bit to let the VP win. I quickly found out he was very, very good, and I would need to actually work a bit to show him how the multi-user feature worked! :-D

In the end, in keeping with the gestalt of this entire blog, we lost out. Supposedly we made the cut to the final two or three companies. The official explanation was that because we weren't a real company, only a virtual one, they couldn't select us. We told them to select us and we'd become real, but they didn't bite. I waited to see what would ultimately launch. Let's just say that more than 6-9 months later they launched a version of Jeopardy that I thought was simply horrid: a server-based version that didn't allow real interaction, though it did grade you on the number of right/wrong answers. It didn't have the green room concept either. *Sigh*

Edit: I actually still have the applet and server code, and it runs in a current environment. It requires too much manual intervention to set up the link-up for me to post a link, though. Sorry... :-(

Tuesday, May 16, 2006

AJAX

As I look at all the hype surrounding AJAX and Web 2.0, I can't help but think back on the work we did with Device Mosaic, specifically DM 4.0.

With DM 4.0 we set out to create a "modern" browser, which back then meant an IE 5.0 equivalent, that could run in a very tight footprint. IE 5.0 had a number of features that enabled dynamic HTML as never before. Since we were creating a brand-new HTML handler, we decided we wanted to support many of those features. We also relied on HTML to render our own UI (the UIM, as we called it), so we planned to use these features there too and make them a selling point to device manufacturers for customization.

Our pitch let device manufacturers and their end-users create dynamic applications in a "walled garden" environment using these standard features. It landed us a professional services engagement with TiVO. Contrary to some information out there, we didn't port DM to Linux because of TiVO. In fact, we saw that Linux was becoming popular and "free," so it was likely to be included in more devices. As such we chose it as one of our reference platforms, with Win32 as the default platform because of the capabilities of its debugging tools. In any case, we were selling to TiVO, and they wanted to see if they could convert their UI to run as DHTML inside DM. This would require DM to run JavaScript code and load data from a database to change the UI. We were actually able to mock up quite a bit of the UI using DM's features. Not only was it a mock-up, it was very close to the existing interface, not some horrid hack. There were still many things to add or fix, such as an audio handler to play sounds on cue, but with this demonstration we were able to sell TiVO on DM.

Now, this wasn't all XML based and we didn't create or use XMLHttp, but the DHTML, the concepts for getting data dynamically, and the use of event models and JavaScript data structures to create a more compelling interface were definitely there. We used similar capabilities in DM for other projects as well, but I never saw much similar usage elsewhere. In fact, for years after leaving Spyglass and joining another web-based startup, I was constantly depressed by the UIs our web applications had. There was so much more that could be done with IE and JS even back then, but almost no one did it. I was amazed when our Jeopardy Java applet lost out on SonyStation (boy, is that another story) to a server-based HTML app that used minimal JS rather than a DHTML-enabled app. It's nice to see AJAX and Web 2.0 finally bringing these capabilities to the mainstream.

Read some background on TiVO utilizing DM here.

Wednesday, May 10, 2006

Browsers Everywhere

Nintendo and Sony have begun to put browsers into their videogame consoles and handhelds. It is interesting to see this trend because I happened to work at Spyglass (you know... the Mosaic folks) at a time when they had to fundamentally shift their market or disappear. We shifted to a strategy of supporting embedded devices. One of our customers at that time was Datel, who just so happened to port our code to the N64. That was circa 1999 or so... flash forward, and what is old is new again.

To celebrate that time, though, and the concept of embedding browsers in devices, I'd like to talk about another port (one we actually participated in, unlike the N64 work) and why it was very, very cool for its time: the IBM Network Station network computer. Network computers were going to be the next big thing. Taking client-server computing to the extreme, these NCs were going to allow everybody in a corporation to have a computer on their desk. They would run simple applications on their own but also provide green screen emulation, X capabilities for running applications off UNIX servers, and a Java JVM that would allow various applications to be pushed down and executed. They didn't take over the world, but several shipped and had a modicum of success.

Our involvement with the IBM Network Station began when Spyglass was approached to see if we could get our browser running on their hardware. I'm convinced that, at the time, the approach was a bargaining tactic by IBM with Netscape, errr.... Navio and their browser. The challenge they laid in front of us was to have a functioning port of the browser in a week or two. This was in the days prior to our Device Mosaic strategy, and the only viable codebases we had were our Windows and Unix ones. The code at the time had many, many issues with memory leaks and crashes, and getting it to fit on an 8MB NC was sure to be a challenge. There were two of us on the team, George and myself. We were both founding members of the professional services group at Spyglass and were assigned the task of getting this done.

Brief background on why a port: the NC was managed remotely, and to accomplish this IBM felt that a browser-based interface needed to be made available. A browser running on the device itself was therefore required, so that a user could browse to the appropriate pages on the server and configure the browser. It would also allow people within the company to run several compute-intensive tasks locally, rather than on a server CPU as with Citrix.

History complete, it became immediately apparent that our Unix codebase would make the best starting point. Why? Because it turned out that the IBM NC was really nothing more than a gussied-up NCD X terminal, and the X terminal ran a variant of BSD. The problems started when we got the build environment and found that there were no function prototypes in the header files. This caused the build to spew thousands of warnings with no real clue as to which ones pointed to real errors and which simply meant a function had not been declared. Finding a real problem in this output was an extremely tedious process.

Within the span of a couple of days, though, the makefiles and code had been tweaked and we got it to compile. Running the app was another matter. The kernel the NC ran was optimized for memory, so it did not include all the functions you would expect in either the C runtime or Xlib, Xt, or Xm. It included the ones NCD had previously used and found useful in their apps, but it was missing a number of functions a browser needed to rely on. After a couple of spins of the kernel we finally had an executable that would run and a kernel that could provide the appropriate services. We were absolutely delighted to watch our browser start up and run on such a low-end device. It couldn't do much at the time, but it provided enough ammunition for IBM, which had a hard requirement to ship an 8MB system with a browser, to oust Navio as the default browser and install the Spyglass browser instead!

Little did we realize the headaches that were still to come. We were already somewhat aware of the instability and memory issues that would need to be fixed but were still quite ignorant of the extent of that work. IBM wanted, nay needed, JavaScript to function within the browser, and we had nothing at that point. IBM also wanted Java integration within the browser (a large part of their sales pitch for the NC), and we had a rudimentary integration on Unix, but to make a browser and Java run in 8MB?!?

We divided up the work and brought on many more engineers from around the company. The two of us got it sold, but now we needed to mobilize the entire company to deliver such a comprehensive solution. We acquired the source to Microsoft JScript (hey, there was something useful in dealing with MS at the time), and one person took responsibility for creating a COM interface for our browser code to access the JScript engine. We had folks working on exposing the DOM required to support the IBM application. It all culminated in a team of engineers from PS and core descending on our Mosaic development headquarters in Champaign for a final march to get everything done and integrated. It ended after two 90-hour weeks, with most things integrated and functioning well enough that we could again separate and wrap up the rest of the work back at our respective home bases.

That march left many notable memories, but a few leap out as more memorable than others:

- Fighting a bug in the NC's compiler, which misgenerated code for passing certain types of structures by reference. We only found it by locating the address of the code module where we died (the debugger was woefully inadequate for this) and then reading the assembly code produced by the compiler to see what was happening.

- Chasing a memory issue in our allocator. We replaced the C++ new in the MS JScript implementation so that it allocated out of our own memory blocks, which we managed with our own macros. Somehow the allocator caused an allocation or a free to occur on a non-4-byte boundary, causing an instant bus error. This was found after an all-nighter, when a rescan of the original MS build environment turned up a flag that forced 4-byte allocations in the JScript engine. *sigh*

- Needing a change in the core browser from the engineer responsible. Coming in in the AM, waiting for the engineer, telling him about the change first thing. Going back to check on status around 4:00 PM, but he's gone. Making the change ourselves. Continuing to work other issues, going to sleep for 4 hours, and beating the engineer back into work by a couple of hours. Some folks didn't get the urgency at all. :-(

- Listening to streaming internet radio. I bought my first CDs because I had listened to them for hours on end while coding (take that, RIAA): Tangerine Dream's The Dream Mixes and the Mortal Kombat soundtrack.

- Playing ping-pong and pool after midnight in the back room/garage trying to stay awake and clear my head to think a little more clearly.

- Threatening to quit if the march didn't end, since the hard goals that required us to be together had been accomplished and everything else would be better handled at home.
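The allocator bug in the list above comes down to alignment: on a CPU that faults on unaligned word access, every block handed out must start on a 4-byte boundary. The sketch below is a minimal, hypothetical bump allocator (not our actual macros, and not the JScript flag) showing the usual round-up trick, assuming a 4-byte alignment requirement and offsets into a single fixed block.

```python
ALIGNMENT = 4  # assumed word alignment; unaligned access caused a bus error on the NC


def align_up(n: int, alignment: int = ALIGNMENT) -> int:
    """Round n up to the next multiple of alignment (which must be a power of two)."""
    return (n + alignment - 1) & ~(alignment - 1)


class BlockAllocator:
    """Hypothetical bump allocator that carves allocations out of one fixed block."""

    def __init__(self, block_size: int):
        self.block_size = block_size
        self.offset = 0  # first free byte in the block

    def alloc(self, size: int) -> int:
        """Return the offset of a new allocation, always 4-byte aligned."""
        start = align_up(self.offset)  # skip padding so the block starts aligned
        end = start + size
        if end > self.block_size:
            raise MemoryError("block exhausted")
        self.offset = end
        return start
```

With a 16-byte block, allocating 3, then 5, then 1 bytes returns offsets 0, 4 and 12: the padding after each odd-sized request is skipped rather than handed out, which is exactly what an allocator without the alignment rule fails to do.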

In the end the IBM NC shipped with 8 MB "standard" and our browser as the default, though the Navio browser was available with larger memory configurations. A 16 MB version was required to run Java applications and have Java integrated with the browser (through a custom shared memory interface). We integrated JScript with our browser and had enough of a DOM done to accomplish many, many cool things. We started really profiling our code, both to improve performance and to reduce memory consumption, and we added a substantial amount of stability in low-memory conditions. These changes helped lay the foundation for our later transition to a device-oriented, low-footprint browser.

Here's the little certificate we got when it shipped. It was supposedly the first product to ship for all four systems at the same time:

All that for what, as I described earlier, was an initial 3 or 4 days of work to get the business!

There are many, many interesting Spyglass stories to tell: working on an STB browser with VOD functionality built in, working on a remote browser for interactive TV applications and hotels, creating a proof-of-concept of a remote Java application server, and designing a next-generation browser (DM 4.0) that could run on a device and offer the capabilities of the then-new IE5.

Wiki IBM NC Info

Some IBM NC info

Tuesday, May 02, 2006

sys/net/ethertypes.h

What is so interesting about the 13th and 14th octets of an Ethernet frame, and how does it relate to anything I may have to say?!

If you are on a Unix-based system you may want to have a look at ethertypes.h. Scan down to 0x1989 and look at it: ETHERTYPE_DOGFIGHT. The comment may say something to the effect of: /* Artificial Horizons ("Aviator" dogfight simulator [on Sun]) */
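For the curious, those two octets are the EtherType field: after the 6-byte destination and 6-byte source MAC addresses comes a 16-bit big-endian type that tells the receiver which protocol the payload belongs to. Here is a minimal Python sketch of reading it, assuming an untagged Ethernet II frame with the preamble and FCS already stripped (an 802.1Q VLAN tag would put 0x8100 in these octets instead). The function names are illustrative only.

```python
import struct

ETHERTYPE_DOGFIGHT = 0x1989  # value listed in sys/net/ethertypes.h


def ethertype(frame: bytes) -> int:
    """Return the EtherType stored in octets 13 and 14 of an untagged frame."""
    if len(frame) < 14:
        raise ValueError("frame is shorter than an Ethernet header")
    # Octets 1-6: destination MAC, 7-12: source MAC, 13-14: EtherType.
    (etype,) = struct.unpack("!H", frame[12:14])  # "!" means network (big-endian) order
    return etype


def is_dogfight(frame: bytes) -> bool:
    """True if the frame claims to carry Aviator dogfight traffic."""
    return ethertype(frame) == ETHERTYPE_DOGFIGHT
```

A frame built as `bytes(12) + b"\x19\x89" + payload` would be reported as dogfight traffic, while an ordinary IPv4 frame carries 0x0800 in the same spot.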

History first

Part 1: The military

During my time at ANL one of the projects I worked on was application work for CENTCOM, the United States Central Command. Sadly, we've heard a lot about them since I first did this work for them. They are the military folks with responsibility for the Middle East. The general public probably first became aware of them with the invasion of Kuwait and the continued conflicts in Iraq. But so what? Well, CENTCOM is located at MacDill AFB, and that just so happened to be, until around 1993, the home of an F-16 training squadron.

Part 2: The technology

As our work on Sun workstations proceeded, there was a revolution going on in the workstation space. Things like the SPARC processor and RISC computing in general were improving the performance of desktop workstations, and the love affair with graphics we still have (Nvidia and ATI?!?) was starting to come to the fore on workstations. Sun had developed its first accelerated 2D and simple 3D framebuffer, the Low End Graphics Option (LEGO), which was called the GX when it finally debuted. This framebuffer was fabulous for its improved shared memory blitting and other line/area accelerations, and also for its ability to draw flat-shaded polygons really fast (for the time).
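"Flat shaded" means each polygon is filled with a single color rather than a per-pixel gradient, which is what made it cheap enough for hardware of that era. The sketch below is a generic illustration of the idea, not how the GX or Aviator actually computed colors: it assumes triangles with counter-clockwise winding, a directional light given as a vector pointing toward the light, and Lambert's cosine law for the one intensity per face.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
RGB = Tuple[int, int, int]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(dot(v, v))
    if length == 0:
        raise ValueError("degenerate vector")
    return (v[0] / length, v[1] / length, v[2] / length)


def flat_shade(v0: Vec3, v1: Vec3, v2: Vec3, light_dir: Vec3, base: RGB) -> RGB:
    """Compute the single fill color for a whole triangle."""
    # Face normal from two edges; counter-clockwise winding is assumed.
    normal = normalize(cross(sub(v1, v0), sub(v2, v0)))
    # Lambert's cosine law; faces turned away from the light get zero intensity.
    intensity = max(0.0, dot(normal, normalize(light_dir)))
    return tuple(int(c * intensity) for c in base)
```

Because the whole face gets one color, the rasterizer only has to fill spans with a constant value, which is the operation a card like the GX could accelerate.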

Couple a 10 MIPS SPARC processor with a GX and you get a flight simulator that simply rocked.

Aviator

There really isn't a lot of Aviator's history that I can recall. I recall we got a demo of the GX while it was still in development, and we started to plan how to use all the great features of the new framebuffer in our existing 2D mapping applications. Then we were given some software that had been written as a demo for the card. It was a flight simulator that modeled an F/A-18, an X-29 and a 727(?). It used DTED terrain data for the display and let you fly one of these airplanes over the Hawaiian islands. It even let you enter your own physics data into a file that it would read to create your own plane. Best of all, it let you duke it out over the LAN in a dogfight! Remember, this was around 1989 or 1990. LAN multiplayer DOOM was still 3 or 4 years away.

Result

So I got to play against my co-workers, and we developed a number of strategies for flying these things. We actually got pretty good at it. Then, as our CENTCOM work brought us down to MacDill, we noticed that they had finally acquired a number of SPARCstations with GX graphics cards. We loaded Aviator, and it was now possible for me to fly against a real pilot. Real dogfighting against real aviators! We did a number of trials against a couple of different aviators, with one machine in one room and the other just around the corner in another. We could taunt each other by speaking loudly but couldn't "cheat" and see our opponent's screen.

It was a riot! We won most of the engagements against the real aviators. We were all pretty close in age, and we had a blast being the desk jockeys and nerds that opened a can of whoop-a** on them. Oh sure, they whined about the physics being unrealistic and that maneuver never being possible in a real plane, but that didn't change the fact that they were "dead" and we had won! :-D

Sadly, I know of no screenshots of the game available anywhere. I don't know what happened to the folks who created Aviator. I think I heard that one of the creators of the GX board went on to co-found Nvidia, but I don't know how accurate that is. The simulator kind of dropped off the face of the earth... except for ethertypes.h! It's a shame, really, because it brought a taste of Evans and Sutherland-type simulators to a much larger audience.

More on adventures with US CENTCOM and US SOCOM later.

Tuesday, April 25, 2006

NeXT: Not just GIS

Though GIS has been a part of my career since the early days, that's not the only thing I've had a deep or interesting involvement in. In my early days most of the work I did revolved around UNIX systems such as PDP-11s, VAX-11/780s and Sun workstations. However, for some work the new Mac Plus SE Platinum was just the ticket (my first resume was written with it), and the same lab where I used it also had a Xerox Smalltalk machine. I never used that much, but it, the Mac, and the fact that my advisor was heavily into CHI (Computer Human Interaction) certainly opened my eyes to the "power" of user interfaces.

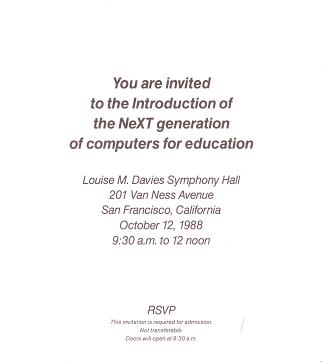

Flash forward a bit to 1988. I'm at ANL, and one of the folks I work with (and had been at school with) has a friend going to work for NeXT. We are aware of NeXT and Steve Jobs from the Apple days. He is promising a Unix-based workstation for education with UI features and capabilities above and beyond the Mac. Who wouldn't want in on some of that? On a cold day over Christmas break 1988 we await the first shipments of NeXT Cubes to Chicago, outside the University of Chicago. One of those machines is "ours". We are delighted when one cube with NeXTStep (I think that was the capitalization at the time) 0.6 arrives!

After opening it up we can't help but be excited by the interface, a dictionary service on every machine, Display Postscript, the dock and the "black hole"! We were blown away. So much so that we "invented" a color version of NeXTStep before NeXT did (another story). Not only could we see the value of this computer for education, but also for the types of projects we were undertaking: a client/server window system, resolution-independent text and graphics drawing, and your world on an optical drive (useful even today; think SIM chips for phones and other systems that carry preferences around on a flash-based medium). We set out to create some original applications for the Cube and also to port some of our existing applications to it.

But this post isn't about any of that work (again, something for later) but rather this: we had so bought into the "reality distortion field" around the product that we simply could not wait for this. Imagine my delight at getting this.

Yeah, okay, completely a geek moment. But I still found it quite cool!

Monday, April 24, 2006

GIS... GIS... Wherefore art thou GIS?

Without my ever really realizing it, Geographic Information Systems (GIS) have been part of my background since the start of my working career. Please, though, don't be like the rest of the hoi polloi and call anything with a map GIS. Oh no! There are plenty of vendors out there that cringe when you say "GIS" and reply with a snooty... "mapping is not GIS". Welcome to the new world, where a mashup is "GIS".

OK, that bit of soap box behind me:

I actually started working with maps back at ANL when I joined the Visual Interfaces Section. Our little part of ANL wasn't like you would think of a government lab. We weren't handed money from a line item that made it into the US Budget. Nope, we were almost exactly like consultants. So we went out in search of organizations within the government, DoE and DoD, that had line item funding and were looking for how to spend it.

Enter our group, which convinced a number of folks that the new affordable computer craze (Sun workstations were starting to be seen as reasonable computing platforms) could help them turn the reams of tabular data output by their models into more concise visualizations on maps. The military had models like TACWAR and AEM that took many input files and generated a model of a conflict between forces. Our idea: put icons on maps, let you click on the icons, and present the tabular information in windows that popped up as a result of those clicks. Cool!

Back in those days our development environment consisted of running SunView and using either vi in a shell window or textedit. Sad to say, I had not yet been exposed to Emacs and the benefits of integrated GDB debugging. However, Sun did have dbxtool and a decent version of make to help us create the applications we wanted. Usenet groups had plenty of SunView programming knowledge, and the documentation as well as the APIs seemed quite reasonable. I think the first set of machines I started on were Sun 3/260 boxes along with 3/50 and 3/60 boxes. These machines had the good old Motorola 68K processors in them and had reasonable speed (OK, not the 3/50, but with only 4MB of memory and booting and swapping over the network you couldn't really expect it to). Most of the development was done on the 3/260 because it had the most memory and a color display, which was kind of important for drawing good-looking maps.

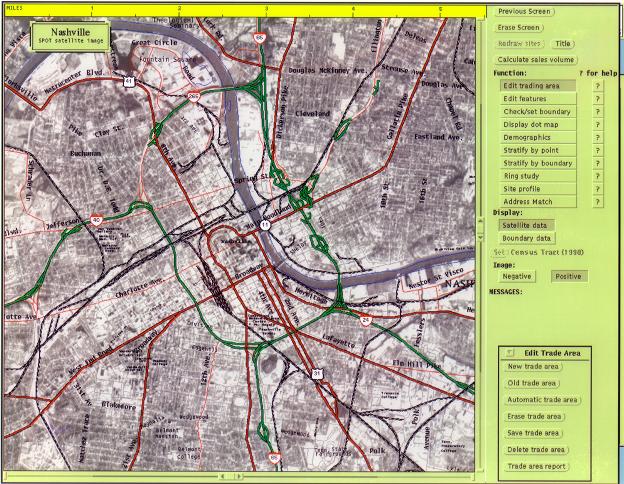

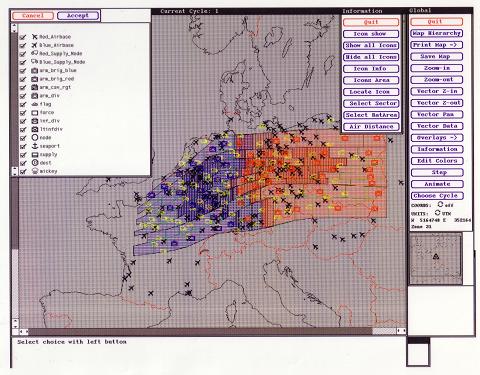

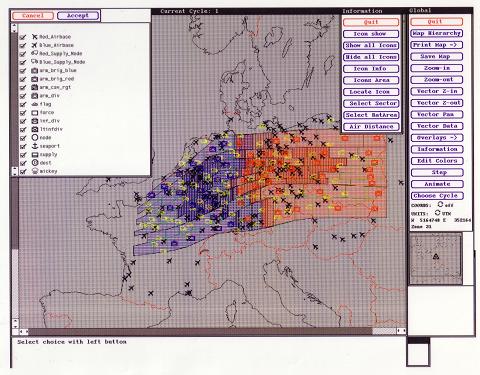

So one of the first applications we did was put a little GUI front-end on the output of a model run: show a map of the area, show little icons denoting forces, airbases, and what all, provide an indicator that let you step forward in time through the model run, and let you click on icons to bring up information about them. Here's a sample picture (Please Note: Any and all images associated with the work I did were all generated from unclassified SAMPLE data! I never had access to anything else.)

As you can see there are coastlines and some rudimentary country boundaries being drawn along with the icon overlays. At this point the idea of how to build all this seemed fairly simple. But there were still two significant problems. The first was that we wanted the icons on the display to be animated and we didn't have a high-end graphics board in any of the machines at the time. The second, and far more limiting, issue was that none of us had any background whatsoever in the field of GIS! What is the difference between a projection, a datum, and a coordinate system? There was plenty to learn!
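What a projection actually does is easiest to see in a few lines of code. Here is a minimal sketch of the Mercator projection we settled on (described in the next paragraph). It assumes a perfectly spherical Earth with a 6,371 km mean radius, which sidesteps the datum and ellipsoid questions entirely. The function names are illustrative, not taken from any GIS library:

```python
import math

# Assumption: a spherical Earth. Real GIS work needs an ellipsoid and a datum.
EARTH_RADIUS_M = 6_371_000.0


def mercator_forward(lat_deg: float, lon_deg: float, r: float = EARTH_RADIUS_M) -> tuple[float, float]:
    """Project latitude/longitude in degrees to Mercator x/y in the units of r."""
    if not -90.0 < lat_deg < 90.0:
        raise ValueError("Mercator y is unbounded at the poles")
    phi, lam = math.radians(lat_deg), math.radians(lon_deg)
    x = r * lam                                        # longitude maps linearly
    y = r * math.log(math.tan(math.pi / 4 + phi / 2))  # latitude is stretched
    return x, y


def mercator_inverse(x: float, y: float, r: float = EARTH_RADIUS_M) -> tuple[float, float]:
    """Map Mercator x/y back to latitude/longitude in degrees."""
    phi = 2 * math.atan(math.exp(y / r)) - math.pi / 2
    lam = x / r
    return math.degrees(phi), math.degrees(lam)


def scale_factor(lat_deg: float) -> float:
    """How much Mercator stretches lengths at a given latitude (1.0 at the equator)."""
    return 1.0 / math.cos(math.radians(lat_deg))


x, y = mercator_forward(29.4, 47.9)  # an arbitrary sample point
print(round(x), round(y))
print(mercator_inverse(x, y))        # back to roughly (29.4, 47.9)
print(scale_factor(60.0))            # about 2: lengths doubled at 60 degrees
```

The scale factor is why Mercator looked fine zoomed in but misleads over larger areas: the projection is conformal, so local shapes are preserved, but lengths are stretched by 1/cos(latitude), doubling by 60 degrees.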

The first thing we had to do was settle on a projection, and after reading up on a number of them we chose the Mercator projection because it was simple and it looked good when you were zoomed in a reasonable amount. It wasn't until quite a bit later that we learned about all the headaches with the aforementioned projections, datums and coordinate systems, though we did support UTM fairly early on (as depicted above). Now we had to figure out how to draw the maps on screen. The early Sun framebuffers were 8-bit colormapped (indexed color) displays that allowed us to load the colors we wanted. But to animate the red and blue forces we had three choices:



- Use double buffering
  - Our graphics card did not support double buffering :(
- Use XOR technique
  - Unfortunately this causes colors to go all "disco" on you as something is drawn over something else and moves. It also presents somewhat of a problem when many things are moving at once.
- Draw as fast as you can
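The XOR option relies on a neat property: XOR-ing the same value into a pixel twice restores the original, so an icon can be erased simply by drawing it again, with no saved copy of the background. On an 8-bit colormapped display, though, the pixel you see where an icon overlaps the map is just some other colormap index, which is where the "disco" colors come from. A minimal sketch, assuming a tiny framebuffer modeled as rows of bytes and made-up colormap indices; the function names are hypothetical and this is not any real Sun graphics API:

```python
# Hypothetical 8-bit colormap indices; the real colormap contents are not known here.
WATER = 0
COAST = 0b0000_0011
RED_FORCE = 0b0000_0100

WIDTH, HEIGHT = 8, 4


def make_framebuffer(fill: int = WATER) -> list[bytearray]:
    """An 8-bit indexed framebuffer: one colormap index (0-255) per pixel."""
    return [bytearray([fill]) * WIDTH for _ in range(HEIGHT)]


def xor_blit(fb: list[bytearray], sprite: list[list[int]], x0: int, y0: int) -> None:
    """XOR a sprite into the framebuffer; index 0 in the sprite leaves pixels untouched."""
    for dy, row in enumerate(sprite):
        for dx, index in enumerate(row):
            x, y = x0 + dx, y0 + dy
            if 0 <= x < WIDTH and 0 <= y < HEIGHT:  # clip to the screen
                fb[y][x] ^= index


def move_sprite(fb, sprite, old_xy, new_xy) -> None:
    """Erase at the old position (a second XOR undoes the first), then draw at the new one."""
    xor_blit(fb, sprite, *old_xy)
    xor_blit(fb, sprite, *new_xy)


fb = make_framebuffer()
fb[1][3] = COAST                    # one coastline pixel in the background
saved = [bytes(row) for row in fb]  # snapshot used only to check the erase below
tank = [[RED_FORCE, RED_FORCE]]     # a 2x1 "icon"

xor_blit(fb, tank, 2, 1)
print(fb[1][2], fb[1][3])  # 4 over water, 7 over the coastline: neither red nor coast
move_sprite(fb, tank, (2, 1), (5, 2))
print(fb[1] == saved[1])   # True: the old spot is restored without a saved copy
```

Double buffering avoids both problems by drawing a complete frame off-screen and swapping it in, which is exactly what our hardware could not do.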

Only one problem. Our sponsor was coming to visit and we were giving a high-profile demonstration of all our work to them. This wasn't working. Something in the input files was causing the application to die with a bus error. Not a segmentation fault, a bus error! Major bummer! It is now the morning of the demo and our sponsor is there. The only machines that can run the program are in the room where all the demos are going to be done. My slot is about two hours in. I go into the demo room and sit down at the only other machine that can run the application, immediately to the right of the demo machine. I grab the monitor, pull it as far right as possible and start debugging with dbxtool! The demos start, and after the first one completes Bob shoots me a knowing look with raised eyebrows. Done yet? I just shake my head no. I keep debugging. The sponsor looks over at me a couple of times. I slide my chair a bit further to the right (the room was small) and pull the monitor even more to the right. I keep debugging. About 15 minutes before I'm up I figure it out. I can't recall the exact problem, but I do recall that it was a stupid one and that I wanted to blurt out an obscenity when I found it. I barely contained myself.

The demo went off without a hitch and more $$ kept flowing. Looking back on it, I can't help but laugh at the experience. There were more like that. I had the pleasure of working with some great folks in our military during this time, both at the Pentagon and at US Central Command. Stories for another time, but I can't leave without giving you a hint of one of those stories. It revolves around the 13th and 14th octets of an Ethernet packet and US Central Command.
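For anyone wondering why two octets would matter: in an Ethernet II frame, octets 1-6 are the destination MAC address, 7-12 the source MAC address, and 13-14 the EtherType, which says what protocol the payload carries. In IEEE 802.3 framing those same two octets hold a payload length instead, and the two are told apart by value (1500 or less is a length, 0x0600 and up is a type). The story itself will have to wait, but a minimal sketch of reading that field, using Python's standard struct module and only a handful of well-known type codes, looks like this:

```python
import struct

# A few well-known EtherType values; far from a complete registry.
KNOWN_ETHERTYPES = {0x0800: "IPv4", 0x0806: "ARP", 0x809B: "AppleTalk", 0x8137: "IPX"}


def type_or_length(frame: bytes) -> int:
    """Return the big-endian 16-bit value in octets 13-14 (zero-based offsets 12-13)."""
    if len(frame) < 14:
        raise ValueError("an Ethernet header is 14 octets")
    (value,) = struct.unpack("!H", frame[12:14])
    return value


def describe(frame: bytes) -> str:
    value = type_or_length(frame)
    if value <= 1500:    # IEEE 802.3: the field is a payload length
        return f"802.3 length {value}"
    if value >= 0x0600:  # Ethernet II: the field is an EtherType
        return KNOWN_ETHERTYPES.get(value, f"EtherType 0x{value:04X}")
    return f"undefined 0x{value:04X}"


# Made-up addresses: broadcast destination, a source with Sun's 08:00:20 prefix.
header = bytes.fromhex("ffffffffffff" "0800200a0b0c" "0806")
print(describe(header + bytes(28)))  # ARP
```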

Sun Framebuffer History

Segmentation Fault vs. Bus Error why a bummer?

Found an Image

Friday, April 21, 2006

Quintillion

So where did this McDonald's Quintillion come from? How did I get involved?

Second question first. I got involved because during that time, circa 1991, I was working at Argonne National Laboratory and my immediate supervisor, Bob (all names will be first name only), happened to have a friend, Gary, who worked in the Marketing department at McDonald's. Now, earlier in 1991 Bob (Smart Adult), David (Smart Kid #1), and I (Smart Kid #2) had started a company together (we were later joined by Smart Kid #3, Gordon). Gary was looking for help in creating a mapping system to analyze existing stores and determine where new stores should go. Oh... Did I mention that we had spent the previous 3 years working on mapping systems for the DoD at ANL?!? (That is a bunch of stories for another time.) I'm pretty sure that had some bearing on Gary thinking we could help him create the software he wanted. So one thing led to another and next thing you know we are working for McDonald's on what was to become Quintillion.

First question: Where did it really come from though?

Well, Quintillion as it is known today was really two different products. There was Impact, the executable responsible for determining what impact placing one store would have on the trade areas of other existing stores, and Speedy, the application that did geographic analysis of existing stores: point at the map, click, and get lots of information about what you clicked on.

Here are a couple of examples of what I'm talking about; see the link below for a more detailed view. On the left you see what I think were impact rings and dot maps of where customers came from and how far they traveled. This information came from surveys done in-store and then fed into the system.


On the right you see one of the cooler comparison tools that we (the royal we; I don't recall whose idea this actually was) came up with. It let us compare how two stores were performing against some other computed metric. It made it very easy to overlay stores and see how they matched up on key metrics and what made a store good or bad.
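Impact's actual model isn't described here, but the ideas on these screens (distance rings around a store, dots for where surveyed customers came from, and how a new site might pull customers from an existing one) are easy to sketch. What follows is a hypothetical simplification, not Quintillion's method: it assumes straight-line great-circle distance on a spherical Earth, made-up ring edges of 1, 3 and 5 miles, made-up coordinates, and treats "the customer lives closer to the new site" as a crude stand-in for impact. All function names are illustrative:

```python
import math
from collections import Counter

EARTH_RADIUS_MI = 3958.8  # mean Earth radius in miles (spherical assumption)


def haversine_miles(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_MI * math.asin(math.sqrt(h))


def ring_counts(store, customers, edges=(1.0, 3.0, 5.0)) -> Counter:
    """Bucket surveyed customer locations into distance rings around a store."""
    counts = Counter()
    for c in customers:
        d = haversine_miles(store, c)
        label = next((f"<= {e:g} mi" for e in edges if d <= e), f"> {edges[-1]:g} mi")
        counts[label] += 1
    return counts


def share_closer_to(proposed, existing, customers) -> float:
    """Crude impact proxy: fraction of an existing store's customers nearer the proposed site."""
    if not customers:
        return 0.0
    nearer = sum(haversine_miles(proposed, c) < haversine_miles(existing, c) for c in customers)
    return nearer / len(customers)


existing = (41.88, -87.63)  # made-up store location
survey = [(41.88, -87.63), (41.91, -87.63), (41.94, -87.63), (42.08, -87.63)]
print(ring_counts(existing, survey))
print(share_closer_to((41.95, -87.63), existing, survey))
```

Real trade-area work usually accounts for things like drive time and competing stores; the sketch only shows the shape of the computation.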
